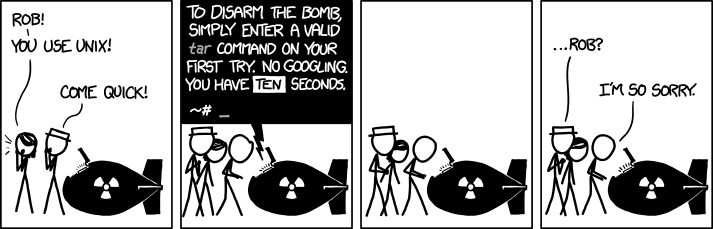

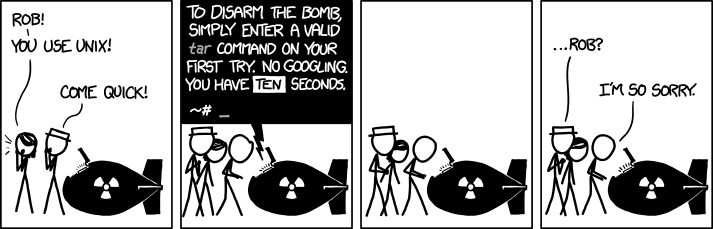

Oblig. XKCD:

tar -h

Edit: wtf... It's actually tar -?. I'm so disappointed

boom

tar eXtractZheVeckingFile

You don't need the v, it just means verbose and lists the extracted files.

tar -xzf

(read with German accent:) extract the files

Ixtrekt ze feils

German here and no shit - that is how I remember that since the first time someone made that comment

tar -uhhhmmmfuckfuckfuck

Zip makes different tradeoffs. Its compression is basically the same as gz, but you wouldn't know it from the file sizes.

Tar archives everything together, then compresses. The advantage is that there are more patterns available across all the files, so it can be compressed a lot more.

Zip compresses individual files, then archives. The individual files aren't going to be compressed as much because they aren't handling patterns between files. The advantages are that an error early in the file won't propagate to all the other files after it, and you can read a file in the middle without decompressing everything before it.
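If you want to see that tradeoff for yourself, here's a rough sketch. It uses per-file gzip as a stand-in for zip's "compress each file, then archive" approach (an assumption on my part, since both use DEFLATE; real zip has its own per-entry overhead), and the sample files are made up.

```shell
#!/bin/sh
# Sketch: "archive then compress" (tar.gz) vs "compress each file" (zip-like).
work=$(mktemp -d)
mkdir "$work/src"

# 200 small files that share the same boilerplate (hypothetical sample data).
i=1
while [ "$i" -le 200 ]; do
    printf 'config_version=1\nserver=example.invalid\nmode=production\nid=%s\n' "$i" \
        > "$work/src/file$i.txt"
    i=$((i + 1))
done

# tar-style: one stream, compressed as a whole, so shared patterns are reused.
tar -czf "$work/solid.tar.gz" -C "$work" src
solid_bytes=$(wc -c < "$work/solid.tar.gz" | tr -d ' ')

# zip-style stand-in: compress every file on its own and add up the sizes.
per_file_bytes=0
for f in "$work"/src/*.txt; do
    n=$(gzip -c "$f" | wc -c | tr -d ' ')
    per_file_bytes=$((per_file_bytes + n))
done

echo "solid tar.gz:        $solid_bytes bytes"
echo "per-file gzip total: $per_file_bytes bytes"
rm -rf "$work"
```

The gap is biggest with lots of small, similar files like these. With a handful of large files it mostly disappears.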

Obligatory shilling for unar, I love that little fucker so much

unar <yourfile>

unzip doesn't

gonna start lovingly referring to good software tools as “little fuckers”

Where .7z at

On windows.

.7z gang, represent

When I was on windows I just used 7zip for everything. Multi core decompress is so much better than Microsoft's slow single core nonsense from the 90s.

cool people use zst

You can't decrease something by more than 100% without going negative. I'm assuming this doesn't actually decompress files before you tell it to.

Does this actually decompress in 1/13th the time?

Yeah, Facebook!

Sucks but yes that tool is damn awesome.

Meta also works on CentOS Stream, through its Hyperscale variant.

Makes sense. There are actual programmers working at Facebook. Programmers want good tools and functionality. They also just want to make good/cool/fun products. I mean, check out this interview with a programmer from Pornhub. The poor dude still has to use jQuery, but is passionate about making the best product they can, like everyone in programming.

When I'm feeling cool and downloading a *.tar* file, I'll wget to stdout, and tar from stdin. Archive gets extracted on the fly.

I have (successfully!) written an .iso to CD this way, too (pipe wget to cdrecord). Fun stuff.
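For anyone who hasn't tried the stdin trick, a minimal sketch. The URL in the comment is a placeholder, and the runnable part swaps wget for a local pipe so it works offline.

```shell
#!/bin/sh
# Sketch: extract a tarball from stdin as it arrives, without saving the archive.
# Over the network this would look like (URL is a placeholder):
#   wget -qO- https://example.invalid/files.tar.gz | tar -xzf -
work=$(mktemp -d)
mkdir "$work/src" "$work/out"
echo "hello from the archive" > "$work/src/hello.txt"

# `tar -czf -` writes the archive to stdout; this pipe stands in for wget -qO-.
# `tar -xzf -` reads the archive from stdin; -C picks the extraction directory.
tar -czf - -C "$work/src" hello.txt | tar -xzf - -C "$work/out"

extracted=$(cat "$work/out/hello.txt")
echo "$extracted"
rm -rf "$work"
```

This only works because tar is a sequential format. Zip keeps its index at the end of the file, so most unzip tools want the whole archive on disk first.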

Can someone explain why macOS always seems to create __MACOSX folders in zips that we Linux/Windows users always delete anyway?

this is a complete uneducated guess from a relatively tech-illiterate guy, but could it contain mac-specific information about weird non-essential stuff like folder backgrounds and item placement on the no-grid view?

I'm the weird one in the room. I used 7z for the last 10-15 years and have now switched to .tar.zst, after finding out that Zstandard achieves higher compression than 7-Zip, even with 7-Zip in "best" mode, LZMA version 1, huge dictionary sizes and whatnot.

zstd --ultra -M99000 -22 files.tar -o files.tar.zst

I use .tar.gz in personal backups because it's built in, and because it's the command I could get custom subdirectory exclusion to work on.
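Not the commenter's actual command, but subdirectory exclusion with tar can look roughly like this. The directory names are hypothetical, and the sketch assumes a GNU tar or bsdtar that supports --exclude.

```shell
#!/bin/sh
# Sketch: back up a directory to .tar.gz while skipping one subdirectory.
work=$(mktemp -d)
mkdir -p "$work/home/docs" "$work/home/cache"
echo keep > "$work/home/docs/notes.txt"
echo skip > "$work/home/cache/tmp.bin"

# --exclude goes before the paths it should apply to.
tar -czf "$work/backup.tar.gz" --exclude='cache' -C "$work" home

# List the archive contents to confirm what was left out.
listing=$(tar -tzf "$work/backup.tar.gz")
echo "$listing"
rm -rf "$work"
```

The pattern matches any path component named cache, so use a more specific pattern if you have several directories with that name.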

7z gang joined the chat.....

Mf’ers act like they forgot about zstandard

Me removing the plastic case of a 2.5" SATA SSD to make it physically smaller

.tar.gz

all the cool kids use .cab

Can we please just never use proprietary rar ever. We have 7z, tar.gz, and the classic zip

I use the command line every day, but can't be bothered with all the compression options of tar and company.

zip -r thing.zip things/ and unzip thing.zip are temptingly more straightforward.

Need more compression? zip -r -9 thing.zip things/. Need a faster option? Use a smaller digit.

"yes i would love to tar -xvjpf my files"

-- statement dreamed up by the utterly insane

Zip is fine (I prefer 7z), until you want to preserve attributes like ownership and read/write/execute rights.

Some zip programs support saving Unix attributes, others do not. So when you download a zip file from the internet, it's always a gamble.

Tar + gzip/bz2/xz is more Linux-friendly in that regard.

Also, zip compresses each file separately and then collects all of them in one archive.

Tar collects all the files first, then you compress the tarball as a whole, which is more efficient and usually produces a smaller archive.
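On the permissions point above, a small sketch that round-trips an executable script through tar and checks that the owner's execute bit survives. Ownership isn't shown, since restoring it generally needs root. The file name is made up.

```shell
#!/bin/sh
# Sketch: tar records the mode bits, so an executable stays executable.
work=$(mktemp -d)
mkdir "$work/src" "$work/out"
printf '#!/bin/sh\necho hi\n' > "$work/src/run.sh"
chmod 755 "$work/src/run.sh"

tar -czf "$work/a.tar.gz" -C "$work/src" run.sh
tar -xzf "$work/a.tar.gz" -C "$work/out"

# First four characters of `ls -l`: file type plus the owner's rwx bits.
perms=$(ls -l "$work/out/run.sh" | cut -c1-4)
echo "owner permissions after extraction: $perms"
rm -rf "$work"
```

When extracting as a normal user, tar applies your umask to the stored mode, so group and other bits can come out tighter than what's in the archive.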