this post was submitted on 10 Mar 2024

269 points (92.7% liked)

Climate - truthful information about climate, related activism and politics.

5194 readers

1066 users here now

Discussion of climate, how it is changing, activism around that, the politics, and the energy systems change we need in order to stabilize things.

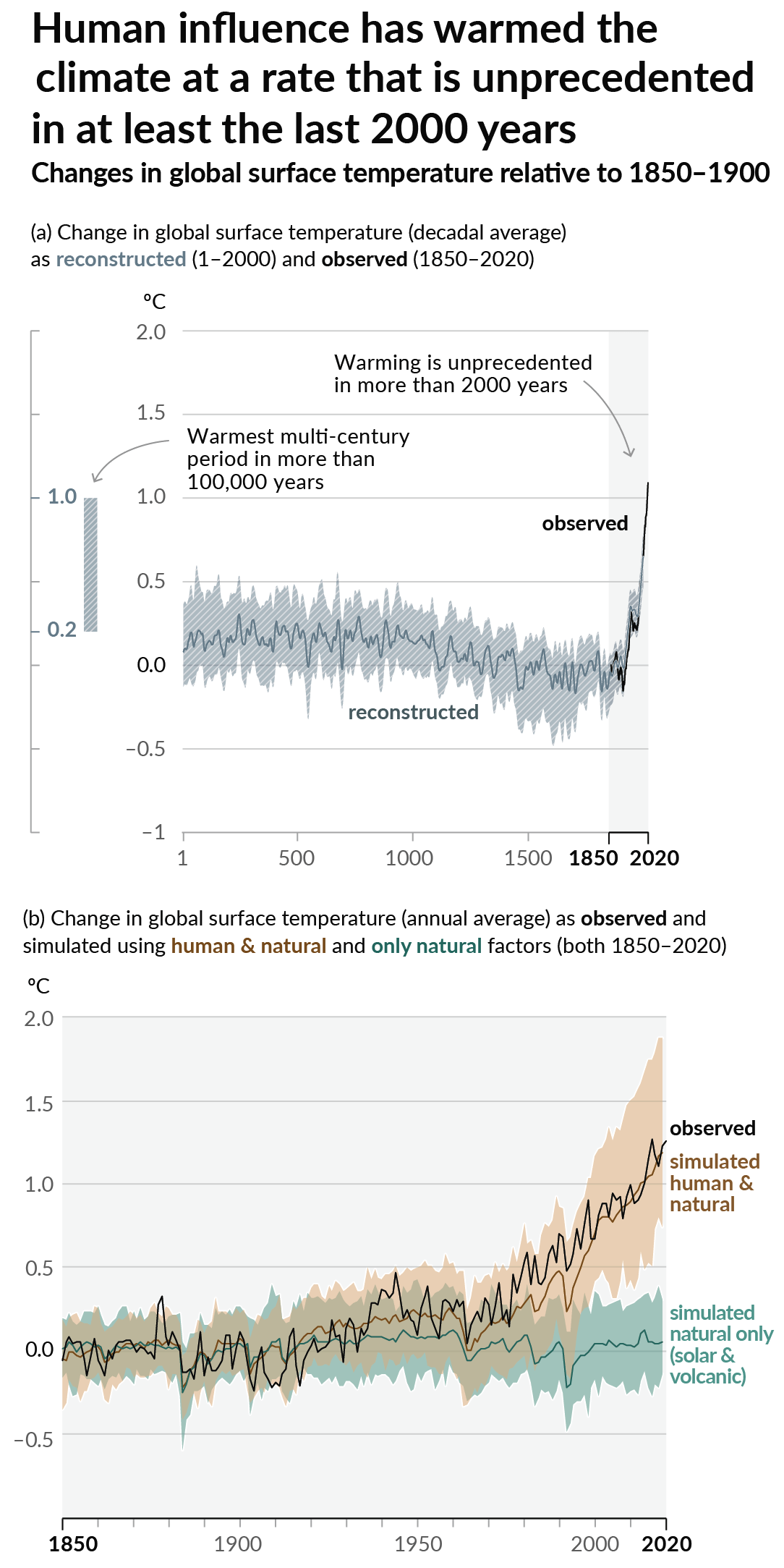

As a starting point, the burning of fossil fuels, and to a lesser extent deforestation and release of methane are responsible for the warming in recent decades:

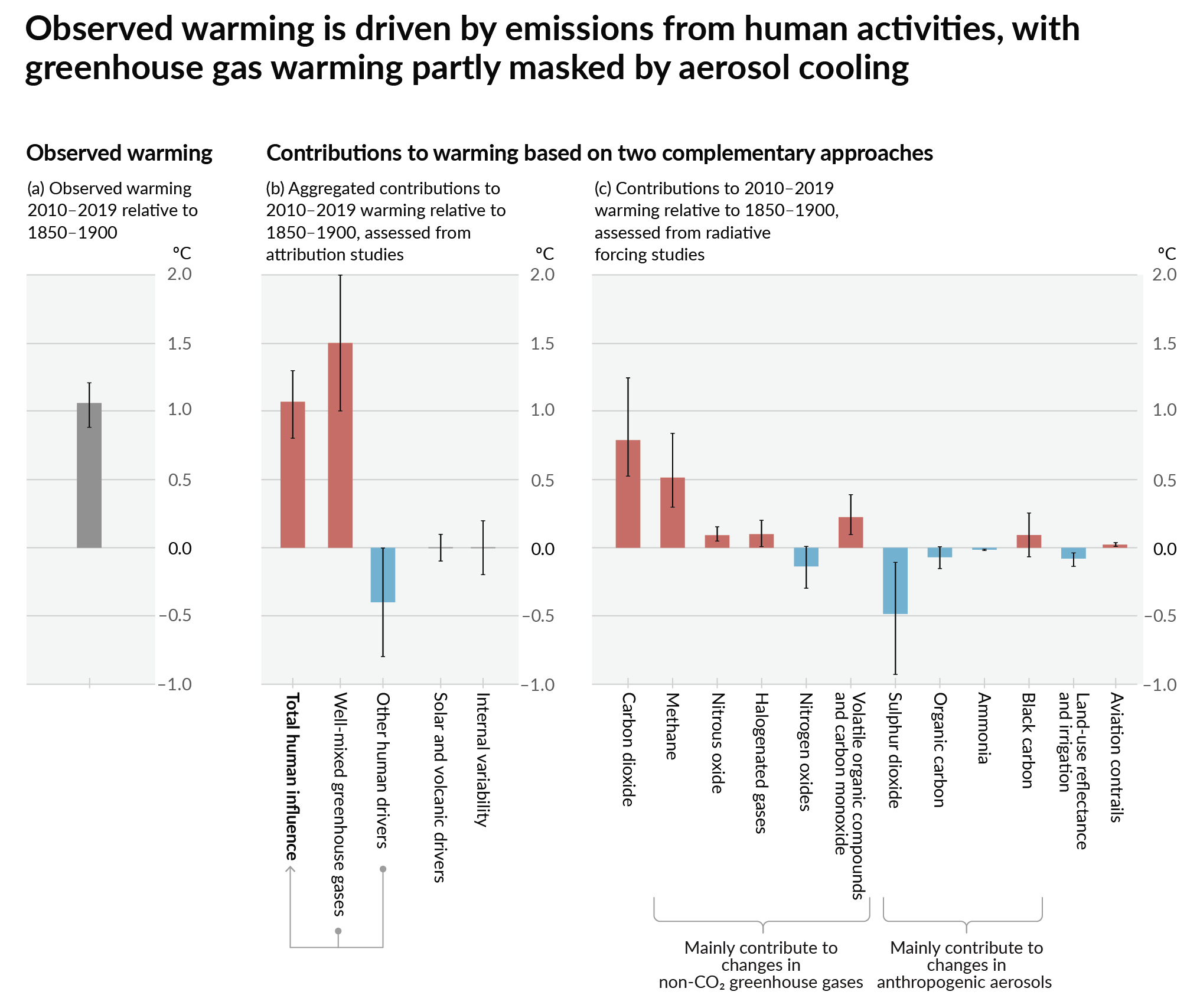

How much each change to the atmosphere has warmed the world:

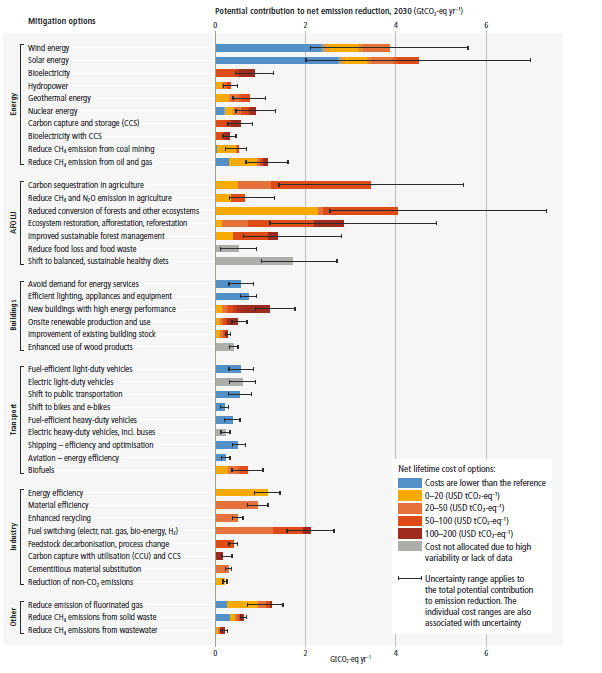

Recommended actions to cut greenhouse gas emissions in the near future:

Anti-science, inactivism, and unsupported conspiracy theories are not ok here.

founded 1 year ago

MODERATORS


If you amortize training costs over all inference uses, I don't think 1,000 MWh is too crazy. For a model like GPT-3 there are likely millions of inference calls to split that cost between.

Sure, and I think these models may even be useful enough to warrant the cost. My point is just that this isn't like running a couple of light bulbs. It's a major draw on the grid (though it likely still pales in comparison to crypto farms).

Note that most people would be better off using a model that's trained for a specific task. For example, training an image recognition model uses far less energy because those models are much smaller, yet they're still excellent at image recognition.

The article claims 200M ChatGPT requests per day. Assuming they make a new version yearly, that's 73B requests per training run. Spreading 1,000 MWh across 73B requests yields a per-request amortized cost of about 0.014 Wh. It's nothing.
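For anyone who wants to check the arithmetic, here's a rough sketch. It assumes the 1000 figure in this thread is training energy in megawatt-hours (a watt is a rate, so only an energy figure can be split per request), and it uses the 200M requests/day and one-retrain-per-year numbers from the comment above. The constant and function names are just made up for illustration.

```python
# Back-of-envelope amortization of training energy over inference requests.
# Assumption: the thread's "1000" figure is read as 1,000 MWh of training energy.
TRAINING_ENERGY_MWH = 1_000
REQUESTS_PER_DAY = 200_000_000   # figure the comment quotes from the article
DAYS_PER_TRAINING_CYCLE = 365    # assumes one new model version per year


def amortized_wh_per_request(training_mwh, requests_per_day, days):
    """Return training energy per request in watt-hours."""
    total_requests = requests_per_day * days
    training_wh = training_mwh * 1_000_000  # 1 MWh = 1,000,000 Wh
    return training_wh / total_requests


total_requests = REQUESTS_PER_DAY * DAYS_PER_TRAINING_CYCLE
per_request_wh = amortized_wh_per_request(
    TRAINING_ENERGY_MWH, REQUESTS_PER_DAY, DAYS_PER_TRAINING_CYCLE
)

print(f"{total_requests:,} requests per training cycle")  # 73,000,000,000
print(f"{per_request_wh:.4f} Wh per request")             # 0.0137
```

Note this only spreads the training energy around. It says nothing about the energy each request uses at inference time, which would be a separate number on top.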

47 more households' worth of electricity just isn't a major draw on anything. We add ~500,000 households a year from natural growth.