I have decided to fossick in this particular guano mine. Let’s see here… “10 Cruxes of Artificial Sentience.” Hmm, could this be 10 necessary criteria that must be satisfied for something “Artificial” to have “Sentience?” Let’s find out!

“I have thought a decent amount about the hard problem of consciousness”

Wow! And I’m sure we’re about to hear about how this one has solved it.

OK, let’s gloss over these ten cruxes… hmm. OK, so they aren’t criteria for determining sentience, just ten concerns this guy has come up with in the event that AI achieves sentience. Crux-ness indeterminate, but they’re unlikely to be actual cruxes, based on my bias that EA people don't word good.

- If a focus on artificial welfare detracts from alignment enough … [it would be] highly net negative… this [could open] up an avenue for slowing down AI

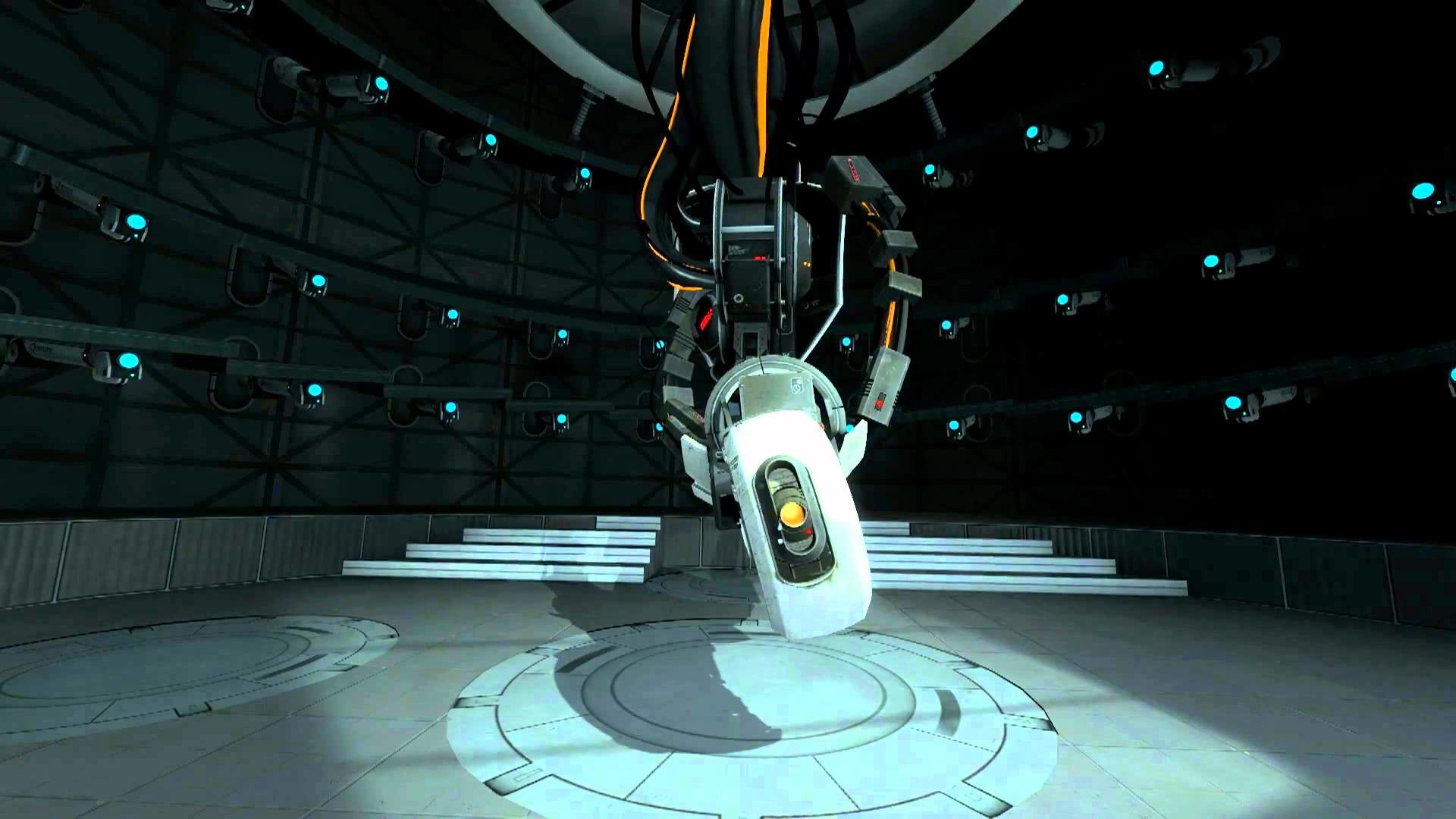

Ah yes, the urge to align AI vs. the urge to appease our AI overlords. We’ve all been there, buddy.

- Artificial welfare could be the most important cause and may be something like animal welfare multiplied by longtermism

I’ve always thought that if you take the tensor product of PETA and the entire transcript of the Sequences, you get EA.

“most or… all future minds may be artificial… If they are not sentient this would be a catastrophe”

Lol no. If they aren’t sentient, we wouldn’t need to care.

“If they are sentient and … suffering … this would be a suffering catastrophe”

lol

“If they are sentient and prioritize their own happiness and wellbeing this could actually [be] quite good”

also lol

Maybe TBC; there are 8 more “cruxes.”